Núcleo Português do

Museu da Pessoa

Querying and Visualizing

The modules that compose the second part of the system [M2] retrieve information from the RDF TripleStore to create the museum web pages. This task is performed by the Virtual Learning Spaces Generator [M2].

The Virtual Learning Spaces Generator sends queries to the TripleStore [DS] and processes the returned data so that the stored information can be displayed in the Virtual Learning Spaces [VLS].

To run the SPARQL queries sent by the Query Processor, a SPARQL Endpoint is required. The SPARQL Endpoint used was Apache Jena Fuseki (version 2.0).

Several queries were built to check whether the answers returned match the expected ones. Examples of the queries issued are:

1. Interviewee and respective Project
2. Interviewee and his photos
3. Interviewee, his profession, marital status and spouse’s name
4. Interviewee and his events
5. Interviewee by sex and residence
6. Interviewee by type of episode
7. Total Interviewee by Project
8. Life story of Interviewee

All these queries were executed successfully in the Fuseki web interface.

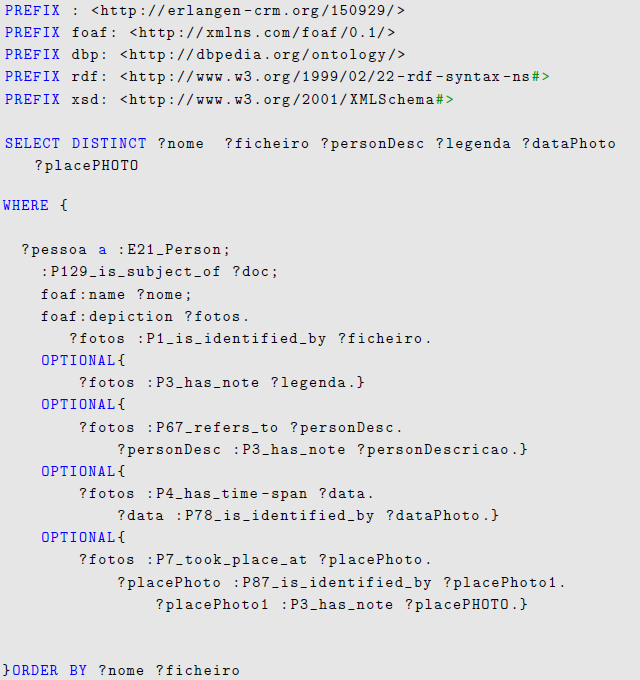

The code block between lines 10 and 14 of Figure 1 searches for all interviewees (E21 Person) and the photos in which each one is depicted (foaf:depiction). The full name of each interviewee is given by the property foaf:name.

In practice, the photos do not all carry every property that would be desirable to retrieve. Therefore, the query uses the OPTIONAL keyword to cope with that variability in the legend (lines 15-16), the description (lines 17-19), the date (lines 20-22) and the place of the photo (lines 23-26).
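The role of OPTIONAL can be illustrated with a minimal sketch that assembles a query of the same shape as a Python string. It is not the query of Figure 1: the `ex:` namespace and the four photo predicates (`ex:legend`, `ex:description`, `ex:date`, `ex:place`) are hypothetical names chosen for illustration, and the CIDOC-CRM namespace URI is an assumption. Only `E21 Person`, `foaf:name` and `foaf:depiction` come from the text above.

```python
# FOAF namespace is the standard one; the crm: and ex: URIs are assumptions.
PREFIXES = """\
PREFIX foaf: <http://xmlns.com/foaf/0.1/>
PREFIX crm:  <http://www.cidoc-crm.org/cidoc-crm/>
PREFIX ex:   <http://example.org/museum#>
"""

# Hypothetical predicates for the photo properties that may be missing.
OPTIONAL_PHOTO_PROPERTIES = {
    "legend": "ex:legend",
    "description": "ex:description",
    "date": "ex:date",
    "place": "ex:place",
}


def build_photo_query(optional_properties=OPTIONAL_PHOTO_PROPERTIES):
    """Return a SPARQL query listing interviewees and their photos.

    Each optional property gets its own OPTIONAL block, so a photo
    without, say, a date still appears in the results.
    """
    select_vars = " ".join(f"?{var}" for var in optional_properties)
    optional_clauses = "\n".join(
        f"  OPTIONAL {{ ?photo {predicate} ?{var} . }}"
        for var, predicate in optional_properties.items()
    )
    return (
        f"{PREFIXES}"
        f"SELECT ?name ?photo {select_vars}\n"
        "WHERE {\n"
        "  ?person a crm:E21_Person ;\n"
        "          foaf:name ?name ;\n"
        "          foaf:depiction ?photo .\n"
        f"{optional_clauses}\n"
        "}\n"
        "ORDER BY ?name"
    )
```

Without OPTIONAL, a photo lacking any one of these properties would be dropped from the result set entirely, because every triple pattern in a basic graph pattern must match.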

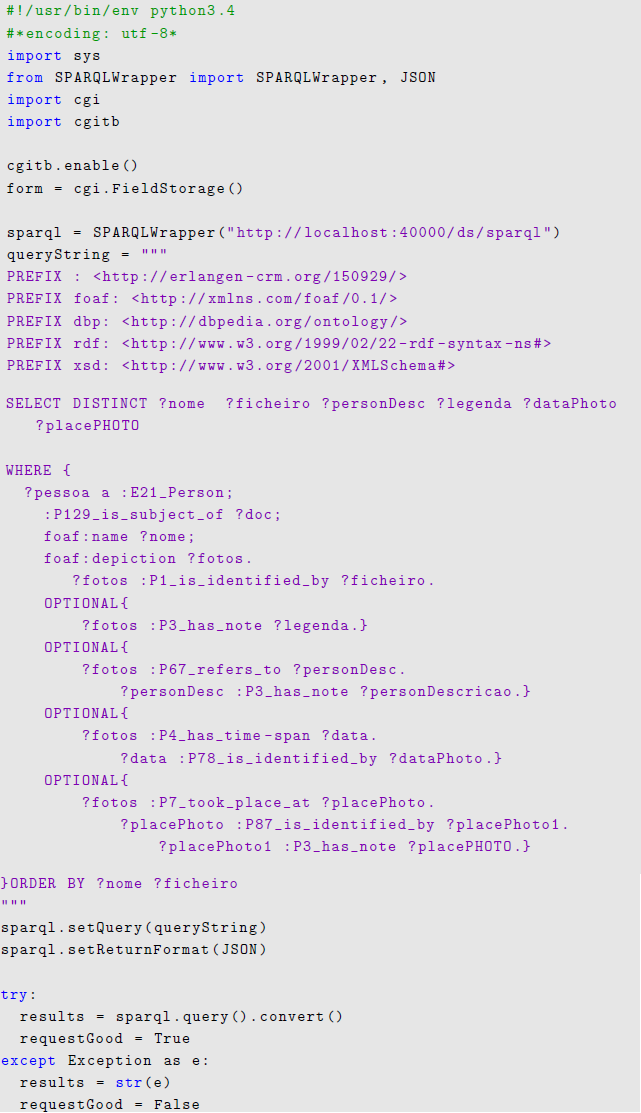

A Python script was created that reuses the manually written SPARQL queries. The script selects the queries needed for a concrete request, sends them to Fuseki (the SPARQL Endpoint) and, after receiving the answer, combines the returned data to configure the Virtual Learning Spaces [VLS]. This Python script also includes HTML (Hyper Text Markup Language) and CSS (Cascading Style Sheets) to create and format the web pages.
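The select, send and combine steps described above could be organised roughly as follows. This is a sketch under stated assumptions, not the structure of the actual script: the request names, the grouping of queries per request and the function `collect_page_data` are all hypothetical, and `run_query` stands for whatever function sends one query to the endpoint.

```python
# Hypothetical mapping from a page request to the stored queries it needs.
# The query names echo the test queries listed earlier; the grouping is
# purely illustrative.
QUERIES_BY_REQUEST = {
    "photos": ["interviewee_photos"],
    "profile": ["interviewee_project", "interviewee_events", "life_story"],
}


def collect_page_data(request, query_texts, run_query):
    """Run every stored query needed for `request` and combine the answers.

    query_texts maps a query name to its SPARQL text; run_query is any
    callable that sends one query to the endpoint and returns its rows.
    """
    if request not in QUERIES_BY_REQUEST:
        raise KeyError(f"no queries registered for request {request!r}")
    return {
        name: run_query(query_texts[name])
        for name in QUERIES_BY_REQUEST[request]
    }
```

Passing `run_query` in as a parameter keeps the selection logic independent of the endpoint, so it can be exercised with a stub before Fuseki is available.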

This Python script uses the SPARQLWrapper library to query the SPARQL endpoint for all interviewees and their photos (query shown in Figure 1). It requests the results in JSON (JavaScript Object Notation) format so that it can easily iterate through the returned data. After preparing the SPARQL query, the script sends it within a try/except block so that it can detect communication problems before attempting to render the results.
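A minimal sketch of this step, assuming the SPARQLWrapper package is installed, might look like the code below. The function names are illustrative and do not come from the original script. The second function relies on the standard SPARQL JSON results layout, in which a variable left unbound by an OPTIONAL block is simply absent from the binding.

```python
def fetch_bindings(endpoint_url, query):
    """Send `query` to the endpoint; return the result bindings or None."""
    # Imported here so the helper below also works without the library.
    from SPARQLWrapper import SPARQLWrapper, JSON

    sparql = SPARQLWrapper(endpoint_url)
    sparql.setQuery(query)
    sparql.setReturnFormat(JSON)
    try:
        results = sparql.query().convert()
    except Exception as error:  # connection, HTTP or query errors
        print(f"SPARQL request failed: {error}")
        return None
    return results["results"]["bindings"]


def bindings_to_rows(bindings, variables):
    """Flatten SPARQL JSON bindings into plain dictionaries.

    Variables that are unbound in a given result (for example an
    optional photo date) are filled with an empty string.
    """
    return [
        {var: binding.get(var, {}).get("value", "") for var in variables}
        for binding in bindings
    ]
```

A call could then take the form `fetch_bindings("http://localhost:3030/museum/query", query)`, where 3030 is Fuseki's default port and the dataset name `museum` is a placeholder.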

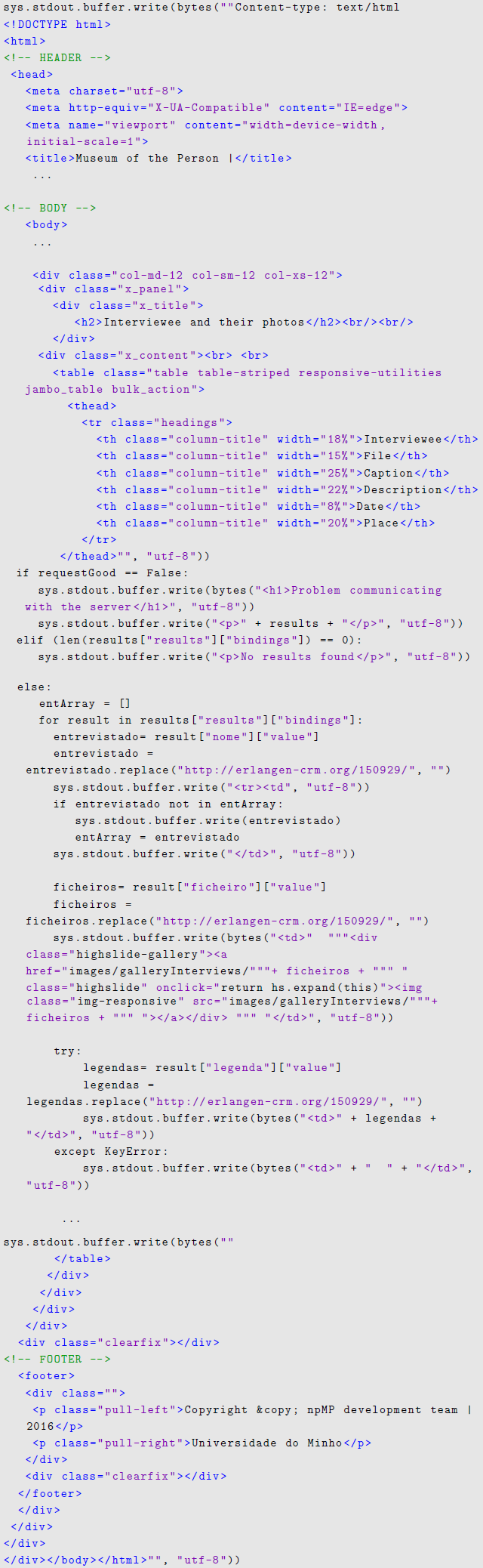

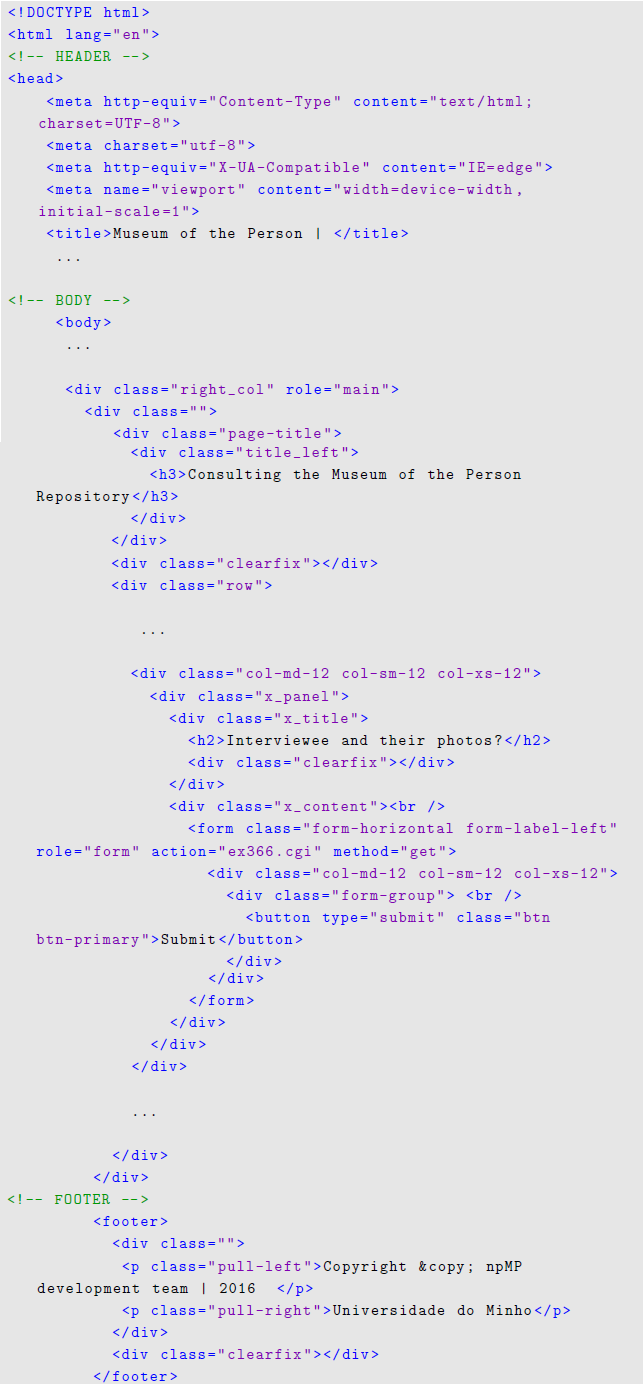

Because it is a CGI (Common Gateway Interface) script, it generates HTML and sends it to the browser, preceded by a Content-type header that announces the web page that follows. Figure 3 shows the HTML and CSS code that creates and formats the web page. The listing illustrates (using code fragments) the three main parts: creation of the header, body and footer of the web page. The script also includes Python code to return the results of the query.
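The header, body and footer structure can be sketched as follows. This is an illustrative reduction, not the code of Figure 3: the markup, the CSS rules and the row keys `name`, `photo` and `legend` are assumptions. The Content-Type line followed by a blank line is what a CGI script must emit before the page itself.

```python
import html

# Header part: document head with the (illustrative) CSS rules.
PAGE_HEADER = """<!DOCTYPE html>
<html>
<head>
<meta charset="utf-8">
<title>Interviewees and their photos</title>
<style>
  body { font-family: sans-serif; }
  figure { display: inline-block; margin: 1em; }
</style>
</head>
"""

# Footer part: closes the body and the document.
PAGE_FOOTER = """<footer>Museu da Pessoa</footer>
</body>
</html>
"""


def render_page(rows):
    """Return the full CGI response for a list of query result rows."""
    # A CGI response starts with the header line and an empty line.
    parts = ["Content-Type: text/html; charset=utf-8\n\n", PAGE_HEADER]
    parts.append("<body>\n<h1>Interviewees and their photos</h1>\n")
    for row in rows:
        # Escape every value so that data cannot inject markup.
        name = html.escape(row["name"])
        photo = html.escape(row["photo"])
        legend = html.escape(row.get("legend", ""))
        caption = f"{name}: {legend}" if legend else name
        parts.append(
            f'<figure><img src="{photo}" alt="{name}">'
            f"<figcaption>{caption}</figcaption></figure>\n"
        )
    parts.append(PAGE_FOOTER)
    return "".join(parts)
```

In a CGI setting the script would simply `print(render_page(rows))`, and the web server would forward the output to the browser.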

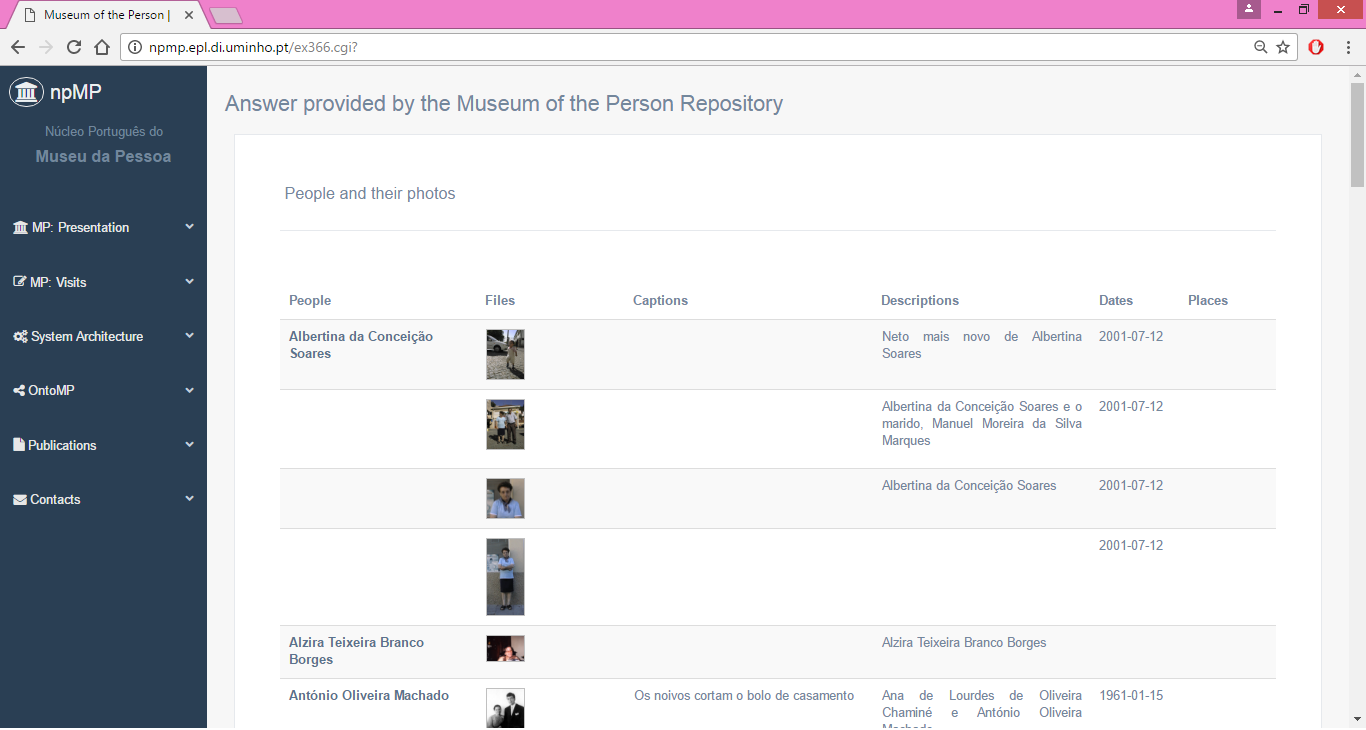

Figure 4 displays the web page created by the Python script (Figures 2 and 3). In other words, it shows the response to the query: (2.) Interviewee and his photos.

The next step was to create a web form to execute the query presented in the Python script (Figures 2 and 3). Figure 5 shows the web form, in HTML, that lists each Interviewee and his photos. This listing also illustrates (using code fragments) the three main parts: creation of the header, body and footer of the web page.

Figure 6 displays the web page created in HTML (Figure 5).